Published on SearchEngineJournal April 29, 2020 (Jason Barnard)

Learn what triggers video and image boxes, how click rate impacts positioning, what helps images and videos rank, and more from Bing’s Meenaz Merchant.

Meenaz Merchant’s official title is Principal Program Manager Lead, AI and Research, Bing.

He puts it more simply: head of the multimedia team.

The first thing I learned is that the same team builds the algorithms for both images and videos.

That means understanding how to approach each gets a little simpler – they will have a similar approach and probably be looking at similar features in a similar manner.

They also run the camera search (reverse image search) algorithm. (Unfortunately, we didn’t have time to talk about that.)

What Triggers Video & Image Boxes?

Intent.

Most obviously, very explicit intent such as “pictures of…” or “videos of…”, and less direct explicitness (as it were) such as “show me a…”.

But also implicit intent, where the user probably wants and expects images or videos on the SERP – for example, movie stars.

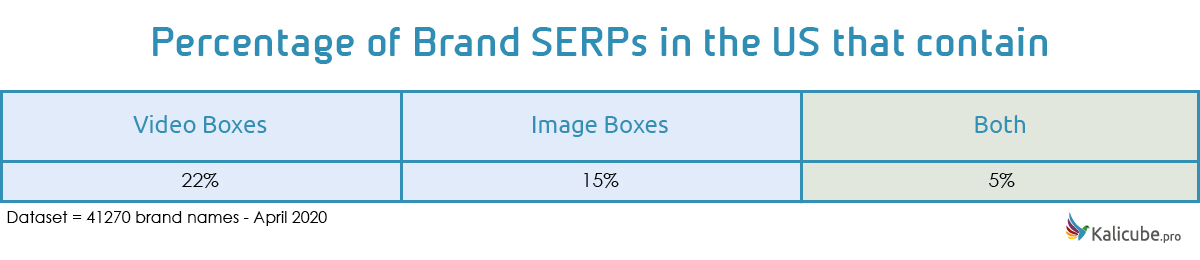

Do Both Appear Side by Side in SERPs Regularly?

Merchant mentions a 10% overlap where both video and images are relevant and helpful to satisfy the user’s intent.

So 10% of the time when they show one, they will also show the other.

From spot checks on Brand SERPs that I have collected at Kalicube.pro, it had seemed to me that the SERPs were biased toward an “either/or” approach.

Merchant tells me this is not so.

I really should have checked my data beforehand. The crossover is quite extensive.

A good third of brands that have images on their SERP also have videos, and a good quarter that have videos also have images.

These are stats from Google SERPs, not Bing, but they nicely illustrate the doubling up Merchant mentions.

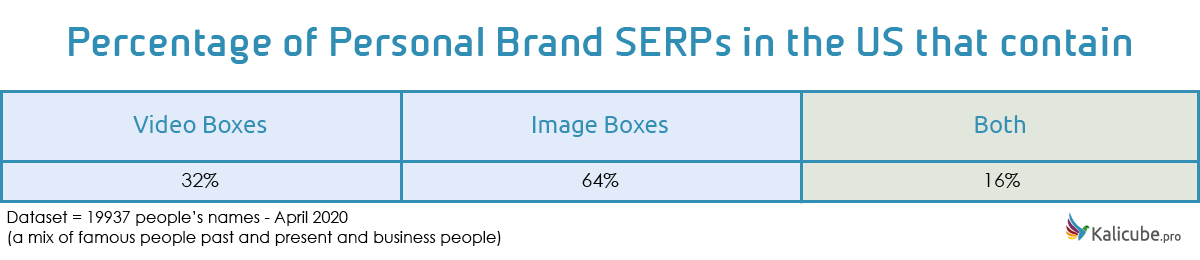

And that data supports what Frédéric Dubut said during the interview that kicked this series off, and that Merchant reiterates.

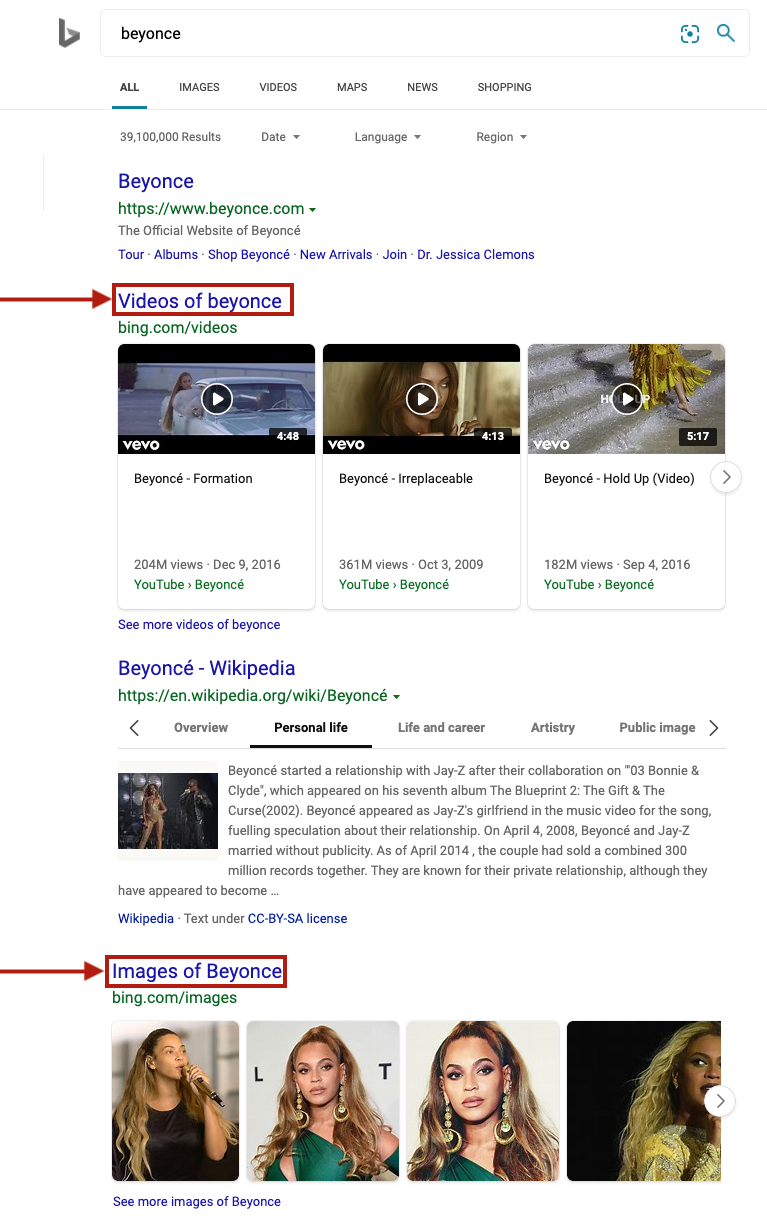

Some queries have very strong implicit intent for images and videos – famous people, especially in the entertainment sphere. Dubut mentions Beyoncé.

Click Rate Affects the Position Where These Rich Elements Appear

Merchant is clear – over time a higher click-through rate on one of these elements will push it up the SERP.

And looking again at the Beyoncé example above, the videos ranking above the images makes even more sense.

In the fifth episode in this series, Nathan Chalmers from the whole page team also states that user behavior on the SERP affects where rich elements are placed.

He also points out that they aggregate data and use machine learning rather than applying click data on a per-query or even per-intent basis.

This is some analysis I really need to do on Brand SERP data.

Not just which rich elements are present for brands and people, but also which are on the rise in rankings – i.e., more popular with searchers.

What Helps Images Rank?

Relevance is the single most important factor.

Merchant says, “Relevance – is this the right image for the query – trumps everything.”

They do want diversity, but won’t dilute the quality of the results in order to expand to different sources.

If one source gives multiple images that are deemed relevant, they will all rank.

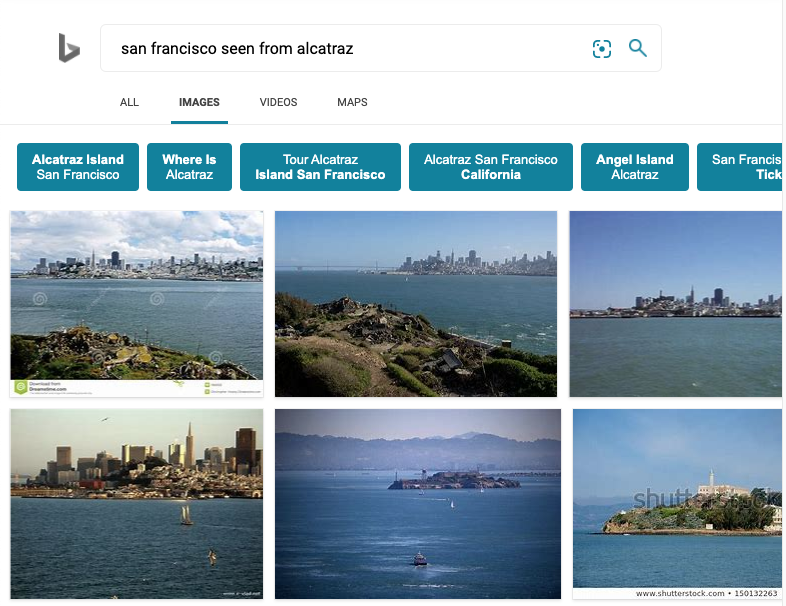

Merchant uses the example of a query for “San Francisco city from Alcatraz Island” which is very specific.

If they have an image that shows that view, that is the most relevant.

Now take the best webpage with the best image SEO, containing an image that looks almost the same but was actually taken from a different vantage point: a picture of the city from the Golden Gate Bridge, for example. That image isn’t relevant, and the algo will attempt to figure that out and filter the result out.

Evaluating relevancy depends on understanding what’s in the image.

They use the “traditional” signals:

- Alt tag.

- Title tag.

- File name.

- Caption.

- The content around the image.

But it turns out the core signal for relevancy is understanding what the image shows by analyzing it with machine learning.
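For anyone who wants to check what those traditional signals look like on their own pages, here is a minimal sketch that collects them for each image. It is an illustration only, not how Bing processes pages: the class name `ImageSignalParser`, the helper `extract_image_signals`, and the sample HTML are all hypothetical, and the sketch only handles a `<figcaption>` that follows its image inside a `<figure>`.

```python
from html.parser import HTMLParser
from urllib.parse import urlparse
import posixpath


class ImageSignalParser(HTMLParser):
    """Collects alt text, title attribute, file name and caption per <img>."""

    def __init__(self):
        super().__init__()
        self.images = []              # one dict per <img> found
        self._figure_images = None    # images inside the currently open <figure>
        self._in_caption = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "figure":
            self._figure_images = []
        elif tag == "figcaption":
            self._in_caption = True
        elif tag == "img":
            src = attrs.get("src") or ""
            record = {
                "alt": attrs.get("alt") or "",
                "title": attrs.get("title") or "",
                "file_name": posixpath.basename(urlparse(src).path),
                "caption": "",
            }
            self.images.append(record)
            if self._figure_images is not None:
                self._figure_images.append(record)

    def handle_endtag(self, tag):
        if tag == "figcaption":
            self._in_caption = False
        elif tag == "figure":
            self._figure_images = None

    def handle_data(self, data):
        # Attach caption text to every image already seen in this <figure>.
        if self._in_caption and self._figure_images:
            for record in self._figure_images:
                record["caption"] = (record["caption"] + " " + data.strip()).strip()


def extract_image_signals(html):
    """Hypothetical helper: returns the collected signals for each image."""
    parser = ImageSignalParser()
    parser.feed(html)
    parser.close()
    return parser.images


# Made-up sample markup, based on the Alcatraz example in the text.
sample_html = """
<figure>
  <img src="/photos/san-francisco-from-alcatraz.jpg"
       alt="San Francisco skyline seen from Alcatraz Island"
       title="San Francisco from Alcatraz">
  <figcaption>The city skyline, photographed from Alcatraz Island.</figcaption>
</figure>
"""

signals = extract_image_signals(sample_html)
for image in signals:
    print(image)
```

An empty `alt` or `caption` in the output is a quick flag that a signal is missing. Whether the signals that are present honestly describe the image is a separate question, and one the parser cannot answer.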

Progress in Machine Learning

As Fabrice Canel points out in episode 2 of this series, the progress Microsoft (and Google) are making using deep learning is exponential.

Their algorithms are improving at an exponential rate.

For images, in particular, the last three years have been the “take-off.”

Bing’s ability to understand the content around an image, and therefore its context, has improved, but so has its ability to analyze the image itself and understand what it contains.

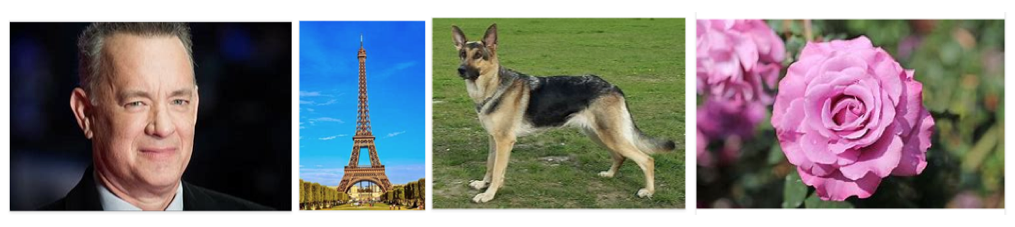

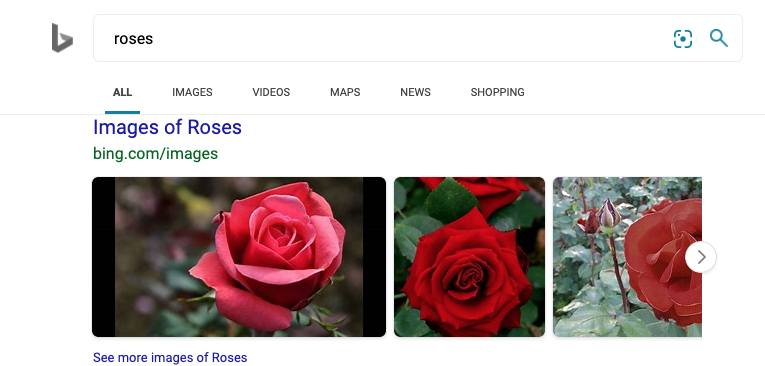

They started with a set of easily identifiable things for which they had boatloads of data (accurately tagged images).

Famous faces, landmarks, animals, flowers…

Tom Hanks, the Eiffel Tower, German shepherd dogs, and roses will have been part of the training set three years ago.

They progressively expanded that out to less well-tagged datasets and incorporated the identification of specific elements in images… to the point that now they can be very subtle.

The example Merchant uses is understanding that a picture of a skyline is a city.

But more than that: which specific city? (e.g., San Francisco)

And yet further: that the picture is taken from a specific vantage point (e.g., the San Francisco skyline taken from Alcatraz Island).

That highlights just how good this analysis is.

As users, we make use of these capabilities (and are starting to take them for granted) and yet forget as marketers just how smart these machines are.

And take that a step further – their confidence that they have correctly understood is increasing exponentially.

That means less and less reliance on all those traditional signals.

Alt tags are no longer the indicator they once were.

Even content around the image can become pretty much redundant if the machine is very confident it has correctly identified what the image shows.

They Analyze Every Single Image

I had always assumed that running images through their algos was costly enough that they couldn’t afford to analyze every image they collect.

Not true.

Merchant states that they analyze every single image and identify what it shows.

That means the clues they see in the filename, alt tags, titles, captions, and even the content around the image are simply corroboration of what the machine has understood.

So there is truly no longer any point in cheating on those aspects.

Bing will spot the cheat and ignore it.

But there is a worse consequence: lost trust.

Taking alt tags as an example, Merchant states that the algorithm will learn which sites are trustworthy and will apply historical trust to ranking.

And that appears to confirm the experiences I have had when submitting pages on my sites to Bing and Google.

Both pages and images get indexed very, very fast (within seconds). Other sites I have tested take minutes, hours, or even days.

Historically built trust would appear to be a major factor here.

And Merchant suggests that building a reputation over time also applies to authority. They look at a lot of signals to evaluate authority.

Merchant focuses on quality content (images in this case), inbound links, and clicks from the SERP.

And that nicely illustrates just how important E-A-T is. They are looking at:

- Expertise (quality content).

- Authoritativeness (peer group support).

- Trust (audience appreciation via interaction on the SERP).

Makes sense.

Both authority and trust are re-evaluated on every search.

The algo is constantly micro-altering its perception of which domains are most trustworthy and authoritative.

Staying honest over time is crucial to your future success.

Image Boxes on the Core SERP Are Simply the Top Results From Image Vertical

Merchant talks about the image vertical and points out that they can generate as many results as the core blue links.

Getting to the top of the image results not only gives visibility there, but also on the core SERPs.

To get seen on the blue links SERP, you simply need to rank near the top for the images for a query that shows image boxes on the core SERP.

If the query is very image-centric, ranking in the top dozen will do, since the image box is bigger.

What Helps Videos Rank?

The signals are similar to images.

First and foremost, relevancy… but then also popularity, authority, trust, and attractiveness (in that order, it seems).

What Triggers a Video Box on a Core SERP?

As with images, whether a video box gets shown on the core SERP depends on the videos’ relevancy to the explicit or implicit intent of the query.

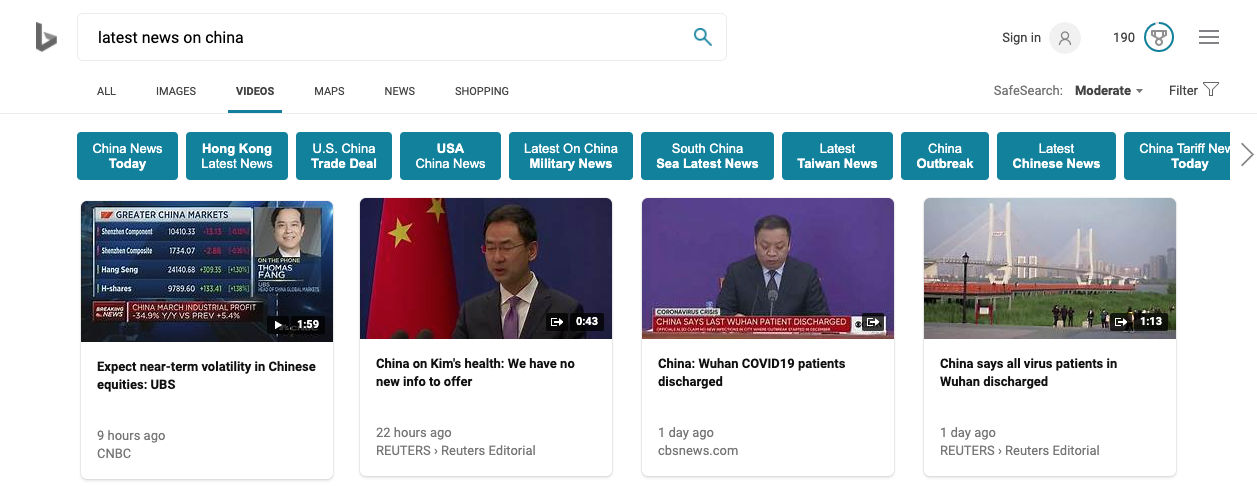

Merchant cites two examples of implicit intent that will trigger video: news and entertainment.

Is There a Domain / Platform Bias?

The platform doesn’t matter as much as producing relevant (that word again!), quality video that your audience engages with. YouTube, Twitter, Facebook, and Vimeo are all options for hosting it.

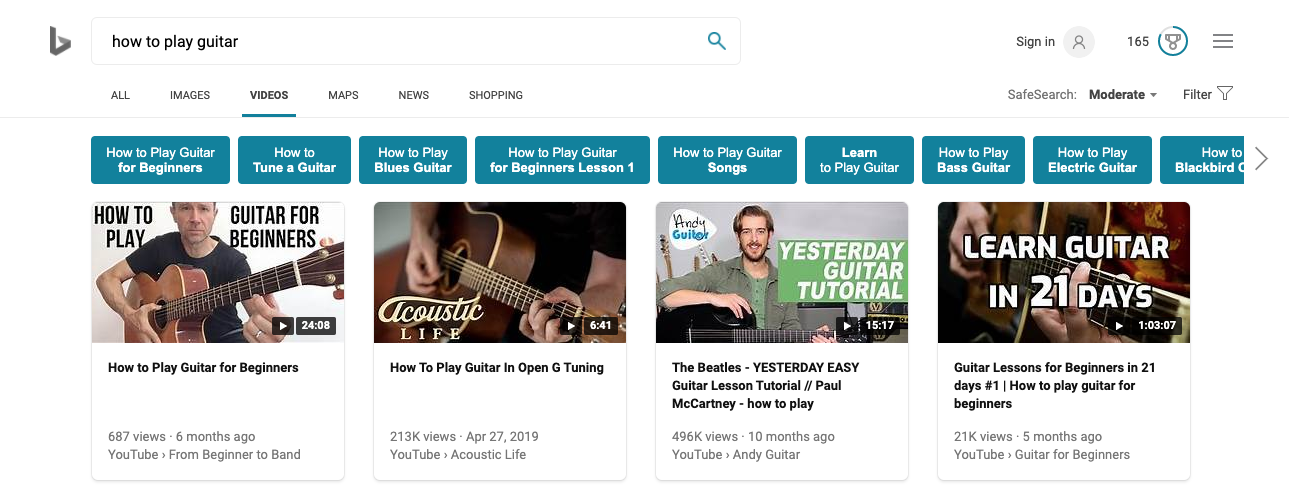

But think about the type of query and your vertical.

Different platforms dominate on different verticals.

YouTube is a great source for how-to videos, but news would tend to favor the BBC or another news site. The first non-YouTube result here was about 400th!

On a query on a niche vertical / industry, the major platforms such as YouTube or the BBC have little advantage.

Authority in that niche can play a big rôle. So, a small, specialized website would be seen by Bing as a perfect source for a video on a very niche search query.

They would look for:

- Quality (a.k.a., being accurate and having decent production standards).

- Authority within that industry (a.k.a., peer group approval).

- Trust the domain has built over the years (a.k.a., providing results to Bing or Google that prove to be useful to their audience – a.k.a. SERP data).

Once again, all this looks suspiciously like E-A-T.

The Importance of E-A-T

The further I get with writing up the interviews in the Bing Series, the more E-A-T stands out, and the more I am convinced that E-A-T is a good way to approach creating and presenting content that ranks.